There are a lot of ways to cache data. You can cache a piece of data, a query, a page fragment, an entire page, or an entire website.

You can cache to local memory, local file storage, distributed memory, distributed file storage, a front cache, or a Content Delivery Network (CDN).

You can cache forever, until the process restarts, or for 5 years, 5 months, 5 days, 5 hours or 5 minutes. Heck, depending on the system, it might even make sense to cache something for 5 seconds. Maybe less.

Why would I cache something for 5 seconds?

I know, I know, it seems silly to cache something for 5 seconds. You probably think this is a ridiculous attempt at a headline to grab clicks. However, let’s explore.

To get much benefit from caching, cache the content for longer than its service time. The service time is the total amount of time it takes to service the request and return the desired item. For example, if a piece of content takes 5 seconds to generate, the service time is 5 seconds, and to get any real benefit we should cache that content for longer than 5 seconds.
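One way to check whether a cache duration clears the service time is to time the call directly. Below is a minimal, illustrative sketch, assuming a Python service; `generate_content`, `measure_service_time` and `cache_ttl_is_useful` are hypothetical names, and comparing against the 95th percentile rather than the median is one reasonable choice, not a rule.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_service_time(work: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Call `work` repeatedly and summarize how long each call took, in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        work()  # the call whose result we are thinking about caching
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {
        "median": statistics.median(samples),
        # Nearest-rank 95th percentile: slow outliers matter more than the average.
        "p95": samples[math.ceil(0.95 * len(samples)) - 1],
        "max": samples[-1],
    }


def cache_ttl_is_useful(ttl_seconds: float, service_time_seconds: float) -> bool:
    """A cache entry only helps if it lives longer than it takes to rebuild."""
    return ttl_seconds > service_time_seconds


def generate_content() -> str:
    # Hypothetical stand-in for an expensive query or page render.
    time.sleep(0.01)
    return "rendered page"


stats = measure_service_time(generate_content, runs=10)
print(stats)
print("5 second cache worthwhile:", cache_ttl_is_useful(5.0, stats["p95"]))
```

Measuring under realistic load matters: the same call can have a very different service time when the database is busy.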

What happens if the service time is longer than the cache time?

If the service time is longer than the cache time, the cached copy expires before a fresh one can be built, so requests for that content will queue. With caching, we want to AVOID queuing, so it’s important to know the service time of the call under a variety of circumstances. You mathematical types can read up on Little’s law, if you are curious: http://en.wikipedia.org/wiki/Little%27s_law
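To see why the queue builds, here’s a rough sketch using the assumptions from the example below (one process, 1 second service time). It treats requests as a steady stream, a fluid approximation rather than a full queueing model, and the function names are hypothetical.

```python
def utilization(arrival_rps: float, service_time_s: float, workers: int = 1) -> float:
    """Fraction of capacity in use; above 1.0, work arrives faster than it is served."""
    return arrival_rps * service_time_s / workers


def backlog_after(arrival_rps: float, service_time_s: float, seconds: float,
                  workers: int = 1) -> float:
    """Requests still waiting after `seconds`, using a simple fluid approximation.

    Capacity is workers / service_time_s requests per second. Anything arriving
    faster than that piles up in the queue; below it, the queue stays empty.
    """
    capacity_rps = workers / service_time_s
    return max(0.0, (arrival_rps - capacity_rps) * seconds)


for rps in (1, 5, 15, 30):
    print(rps, utilization(rps, 1.0), backlog_after(rps, 1.0, 60))
```

With a 1 second service time, 5 RPS leaves about 240 requests waiting after one minute and 30 RPS about 1,740. At exactly 1 RPS the sketch shows no backlog, but with real, bursty arrivals a process running at 100% utilization falls behind too.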

A practical example

Most cacheable content has a service time well under 5 seconds. Let’s talk about what would happen in 2 otherwise identical systems. To make things simple, we’ll pretend the following:

- There is only one process

- The service time of the process is 1 second

- The request levels are 1, 5, 15 and 30 requests per second.

- The non-cached system serves every request in real time; the cached system caches the content for 5 seconds

In the non-cached system, here’s how many requests the process would have to serve during 1 traffic hour at each request rate (requests per second, RPS):

- 3,600 @ 1 RPS

- 18,000 @ 5 RPS

- 54,000 @ 15 RPS

- 108,000 @ 30 RPS

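WOW! A single process with a 1 second service time can only handle 3,600 requests an hour, so anything above 1 RPS piles up. I bet we’d have some major problems in a real-time system under these conditions.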

Let’s compare with the system using a 5 second cache:

- 720 @ 1 RPS

- 720 @ 5 RPS

- 720 @ 15 RPS

- 720 @ 30 RPS
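The 720 figure falls out of how a time-based (TTL) cache works: the first request after an entry expires rebuilds it, and every other request gets the stored copy. The sketch below simulates one hour at each traffic level with a simulated clock. `TTLCache` is a hypothetical single-entry cache, the sketch assumes evenly spaced requests, and it treats regeneration as instantaneous; in the single-process setup above, requests arriving during the 1 second rebuild would wait for it rather than trigger another one.

```python
from typing import Callable, Optional


class TTLCache:
    """A single-entry cache that regenerates its value once it is `ttl` seconds old."""

    def __init__(self, ttl: float, generate: Callable[[], str]) -> None:
        self.ttl = ttl
        self.generate = generate
        self.value: Optional[str] = None
        self.expires_at = float("-inf")
        self.regenerations = 0  # how many requests actually reached the slow process

    def get(self, now: float) -> str:
        if now >= self.expires_at:
            self.value = self.generate()
            self.expires_at = now + self.ttl
            self.regenerations += 1
        return self.value


def requests_reaching_process(rps: int, ttl: float, seconds: int = 3600) -> int:
    cache = TTLCache(ttl, generate=lambda: "rendered page")
    for tick in range(seconds * rps):
        cache.get(now=tick / rps)  # evenly spaced requests over the hour
    return cache.regenerations


for rps in (1, 5, 15, 30):
    print(rps, requests_reaching_process(rps, ttl=5))
```

Every traffic level prints 720. Passing `ttl=300` instead gives 12, the ceiling for a 5 minute cache.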

Hmm, that looks odd. The number of requests reaching the process in the 1 hour period never goes above 720, whatever the traffic level. It’s like an insurance policy against load.

Let’s look at the number of requests we save at each level:

- 3,600-720=2,880 @ 1 RPS

- 18,000-720=17,280 @ 5 RPS

- 54,000-720=53,280 @ 15 RPS

- 108,000-720=107,280 @ 30 RPS

Wow! By caching for 5 seconds, we saved between 2,880 and 107,280 requests per hour.

What’s more interesting is that we’ve established a service ceiling for our system: we’ll never generate more than 720 requests an hour, no matter how many times the link goes viral on www.FunnyCats.com.
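The arithmetic behind these lists fits in three lines. Writing $\lambda$ for the request rate in requests per second, $T$ for the cache duration in seconds and $H = 3600$ for the seconds in an hour (symbols introduced here for illustration), and assuming at least one request arrives in every cache period ($\lambda \ge 1/T$):

$$
\begin{aligned}
N_{\text{uncached}} &= \lambda H \\
N_{\text{cached}} &= \frac{H}{T} \\
N_{\text{saved}} &= \lambda H - \frac{H}{T}
\end{aligned}
$$

With $T = 5$, $N_{\text{cached}} = 3600 / 5 = 720$ regardless of $\lambda$, which is the service ceiling. At $\lambda = 1$ the savings are $3600 - 720 = 2880$; at $\lambda = 30$ they are $108000 - 720 = 107280$.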

As traffic rates increase, the value of a 5 second cache also increases. In a world full of email blasts, viral links, IM and social media, we see more and more bursts of traffic. As traffic bursts, we approach the natural limit of a system. A system can only go as fast as its slowest part (http://en.wikipedia.org/wiki/Amdahl%27s_law), so we need to make sure the slowest part is good enough for the business problem we’re trying to solve.

So should we cache everything at 5 seconds?

A 5 second cache isn’t the answer to every problem, though. Some content can’t be cached at all, like a shopping cart. Some content can be cached forever, like static content named with a version number. And some content types don’t seem cacheable, but might be.

An example would be a page listing inventory. Perhaps the business is able to fulfill limited quantities of out-of-stock items, or the products don’t change often and are restocked all the time. In that case, it might be OK to cache inventory for 5 seconds and handle the occasional out-of-stock order by delaying shipment. The right answer depends on the business problem and the constraints on possible solutions. Which is the better problem to have: a down website, or a few out-of-stock orders to deal with?
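In practice, this usually ends up as a per-content-type policy. The sketch below maps the three examples above to an HTTP `Cache-Control` header, which front caches and CDNs honor. The content-type names and the one-year stand-in for “forever” are assumptions for illustration; `no-store`, `max-age` and `immutable` are standard `Cache-Control` directives, though client support for `immutable` varies.

```python
import math
from typing import Dict, Optional

# Hypothetical content types; the durations mirror the examples in the text.
# None = never cache, math.inf = cache "forever" (safe only for versioned file names).
CACHE_POLICY: Dict[str, Optional[float]] = {
    "shopping_cart": None,
    "static_asset_versioned": math.inf,
    "inventory_listing": 5.0,
}

ONE_YEAR = 365 * 24 * 60 * 60  # a common stand-in for "forever" in HTTP max-age


def cache_control_header(content_type: str) -> str:
    """Translate a per-type cache duration into an HTTP Cache-Control value."""
    ttl = CACHE_POLICY.get(content_type)  # unknown types fall back to "don't cache"
    if ttl is None:
        return "no-store"
    if math.isinf(ttl):
        return f"public, max-age={ONE_YEAR}, immutable"
    return f"public, max-age={int(ttl)}"


print(cache_control_header("inventory_listing"))
```

Defaulting unknown types to `no-store` errs on the side of freshness; a team more worried about load might pick a short default instead.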

Just for fun, let’s look at the difference between caching for 5 seconds and caching for 5 minutes over 1 traffic hour. A 5 minute cache expires 3,600 / 300 = 12 times an hour:

- 720-12=708 @ 1 RPS

- 720-12=708 @ 5 RPS

- 720-12=708 @ 15 RPS

- 720-12=708 @ 30 RPS

The new service ceiling is 12 requests per hour. That’s better than 720, for sure. However, the savings aren’t nearly as dramatic as going from no cache to a 5 second cache. This matters because we must balance the needs of the business with the needs of the system serving it. Maybe the business can’t afford to have information stale for 5 minutes, but 5 seconds is a reasonable level. Then your system only has to support 720 requests per hour (RPH) instead of 108,000, and you can get away with a lot more at 720 RPH than you can at 108,000 RPH.
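To see the diminishing returns in numbers, here’s a small sketch computing the hourly load on the process for a few cache durations at 30 RPS, using the same simplifications as the example (one regeneration per expiry, steady traffic). The function names and the intermediate 30 and 60 second durations are illustrative choices.

```python
HOUR = 3600  # seconds


def hourly_ceiling(ttl_seconds: float) -> float:
    """Most regenerations per hour a single cached item can cause, whatever the traffic."""
    return HOUR / ttl_seconds


def hourly_requests(rps: float, ttl_seconds: float) -> float:
    """Requests reaching the process in an hour; a ttl of 0 means no caching at all."""
    if ttl_seconds <= 0:
        return rps * HOUR
    return min(rps * HOUR, hourly_ceiling(ttl_seconds))


previous = None
for ttl in (0, 5, 30, 60, 300):
    n = hourly_requests(30, ttl)
    extra = "" if previous is None else f"(saves {previous - n:,.0f} more)"
    print(f"ttl={ttl:>3}s -> {n:>9,.0f} requests/hour {extra}")
    previous = n
```

This prints 108,000, 720, 120, 60 and 12 requests per hour. Going from no cache to 5 seconds removes 107,280 requests; each longer duration after that removes 600 or fewer.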

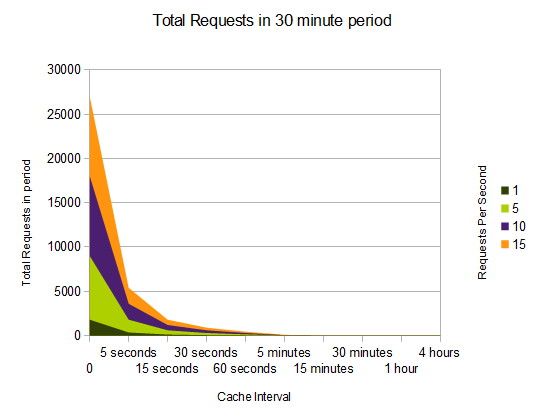

I put together some charts showing the point of diminishing returns on longer cache intervals. The first chart shows the total number of requests in a 30 minute period for different cache times at each traffic level. Note the 5 minute mark as the point at which there is no visible benefit on the chart.

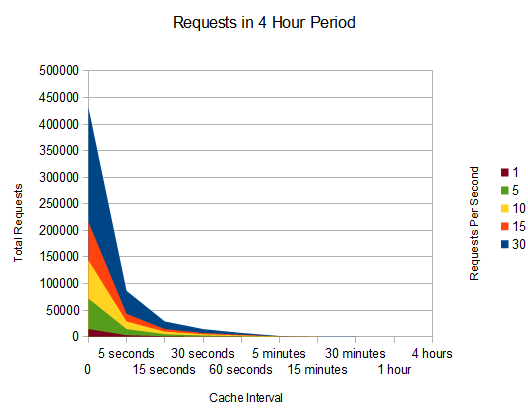

The second chart shows the total number of requests in a 4 minute period for different cache times at each traffic level. Again, note the 5 minute mark as the point at which there is no visible benefit, considering the starting point.

The answer is, as always, “It Depends”

The right time to cache a piece of content really depends on all the elements in the equation. Caching, even in non-intuitive ways, can be used to solve business problems within the available solution constraints.